Visual Relationship Detection with AI

Abstract

Visual Relationship Detection (VRD) enables machines to go beyond object detection and recognize how objects interact within an image. This project combines a pre-trained YOLOv2 deep learning model in MATLAB with heuristic rules to detect object-object relationships, such as “person riding a bicycle” or “dog under table.” Additionally, we evaluate multiple VRD approaches using a Zwicky box (morphological box) based on technical criteria such as accuracy, interpretability, and computational cost. The project is documented in a wiki article with supporting visualizations.

Required Toolboxes / Add-Ons

You’ll need the following MATLAB add-ons installed:

Computer Vision Toolbox

Needed for object detection functions, bounding box operations, and detect().

Deep Learning Toolbox

Required for working with neural networks, including YOLO.

Computer Vision Toolbox Model for YOLO v2 Object Detection

Provides the pretrained Tiny YOLO v2 model used in this project (tiny-yolov2-coco).

Introduction

Recognizing object relationships is crucial for scene understanding in autonomous systems, robotics, and surveillance. Object detection algorithms such as YOLO only locate and classify objects; they do not describe how the objects interact. VRD adds this missing layer of contextual understanding.

This project:

Implements a rule-based VRD system in MATLAB using YOLOv2,

Explains each code segment clearly for reproducibility,

Compares different VRD methods using a morphological matrix,

Documents all results in a structured scientific format.

System Overview and Code Explanation

The system is implemented in MATLAB and consists of six main steps. Each is explained below:

Step 1: Load Pre-trained YOLOv2 Network

detector = yolov2ObjectDetector('tiny-yolov2-coco');

Explanation: This line loads the Tiny YOLOv2 object detector pre-trained on the COCO dataset. COCO includes 80 common object classes such as person, dog, and bicycle. The detector later locates instances of these classes in the input image; objects outside the 80 classes cannot be detected.

'tiny-yolov2-coco': Lightweight version of YOLOv2 optimized for speed and low memory usage.

yolov2ObjectDetector(...): MATLAB's built-in deep learning object detection class.

Step 2: Load Image

dataDir = fullfile('..', '..', 'Data');

imageFile = fullfile(dataDir, 'VisualRelationship', 'horseriding.jpg');

img = imread(imageFile);

Explanation: The input image is read from the project's Data folder (checked out from the SVN repository) and stored in the array img.
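Because dataDir is a relative path, Step 2 only works when MATLAB's current folder is two levels below the Data folder. A minimal pre-check is sketched below; it assumes MATLAB R2017b or newer for isfile and only prints a message, whereas in the actual script an error call at this point would stop execution before imread is reached.

```matlab
% Same relative path as in Step 2: it resolves only if MATLAB's current
% folder is two levels below the project's Data folder
dataDir   = fullfile('..', '..', 'Data');
imageFile = fullfile(dataDir, 'VisualRelationship', 'horseriding.jpg');

% Check the file before calling imread and report the folder MATLAB
% is actually working in, which makes path problems easy to spot
if isfile(imageFile)
    fprintf('Found input image: %s\n', imageFile);
else
    fprintf('Input image not found: %s\nCurrent folder: %s\n', imageFile, pwd);
end
```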

Step 3: Run Object Detection

[bboxes, scores, labels] = detect(detector, img, 'Threshold', 0.5);

if isempty(bboxes)
    error('No objects detected. Please try a different image or adjust the threshold.');
end

Explanation: The detect() function returns:

bboxes: Bounding boxes around detected objects,

scores: Confidence levels,

labels: Object class names.

A threshold of 0.5 keeps only detections with a confidence score of at least 0.5; detections with lower scores are discarded.
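The effect of the 'Threshold' argument can be illustrated with a plain logical mask. The sketch below uses made-up boxes, scores, and labels rather than real detector output; detect() performs this filtering internally, so the code only mirrors its effect on the three outputs.

```matlab
% Made-up detections in the same layout as detect(): one row per object,
% bounding boxes as [x y width height] in pixels
bboxes = [ 50  40 120 200;    % person
           60 150 180 140;    % horse
          300  20  40  40];   % low-confidence detection
scores = [0.91; 0.78; 0.32];
labels = categorical(["person"; "horse"; "dog"]);

threshold = 0.5;

% Keep only detections whose confidence reaches the threshold
keep   = scores >= threshold;
bboxes = bboxes(keep, :);
scores = scores(keep);
labels = labels(keep);

fprintf('%d of %d detections kept\n', nnz(keep), numel(keep));   % 2 of 3 detections kept
```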

Step 4: Infer Visual Relationships

relationships = {};      % cell array that stores relationship descriptions
numObjects = size(bboxes, 1);
nearThreshold = 100;     % pixel threshold for the "near" relation

for i = 1:numObjects
    for j = i+1:numObjects
        obj1 = string(labels(i));
        obj2 = string(labels(j));
        box1 = bboxes(i, :);
        box2 = bboxes(j, :);

        % Calculate center points of the bounding boxes ([x y width height])
        center1 = [box1(1) + box1(3)/2, box1(2) + box1(4)/2];
        center2 = [box2(1) + box2(3)/2, box2(2) + box2(4)/2];

        % Calculate differences between centers (image y-axis points downwards)
        x_diff = center2(1) - center1(1);
        y_diff = center2(2) - center1(2);

        relation = '';   % empty character vector: no relation found yet

        % Rule: Person riding Bicycle (check both orders)
        if contains(lower(obj1), "person") && contains(lower(obj2), "bicycle")
            relation = sprintf('%s riding %s', obj1, obj2);
        elseif contains(lower(obj2), "person") && contains(lower(obj1), "bicycle")
            relation = sprintf('%s riding %s', obj2, obj1);

        % Rule: Person riding Motorcycle
        elseif contains(lower(obj1), "person") && contains(lower(obj2), "motorcycle")
            relation = sprintf('%s riding %s', obj1, obj2);
        elseif contains(lower(obj2), "person") && contains(lower(obj1), "motorcycle")
            relation = sprintf('%s riding %s', obj2, obj1);

        % Rule: Person riding Horse
        elseif contains(lower(obj1), "person") && contains(lower(obj2), "horse")
            relation = sprintf('%s riding %s', obj1, obj2);
        elseif contains(lower(obj2), "person") && contains(lower(obj1), "horse")
            relation = sprintf('%s riding %s', obj2, obj1);

        % Rule: Dog under Table (the dog's center must be lower, i.e. have a larger y)
        elseif contains(lower(obj1), "dog") && contains(lower(obj2), "table") && y_diff < 0
            relation = sprintf('%s under %s', obj1, obj2);
        elseif contains(lower(obj2), "dog") && contains(lower(obj1), "table") && y_diff > 0
            relation = sprintf('%s under %s', obj2, obj1);

        % Rule: Objects near each other (only if they are close enough)
        elseif abs(x_diff) < nearThreshold && abs(y_diff) < nearThreshold
            relation = sprintf('%s near %s', obj1, obj2);
        end

        % Add the relation if it is non-empty
        if ~isempty(relation)
            relationships{end+1} = relation; %#ok<AGROW>
        end
    end
end

Explanation: This is the heart of the system. It compares each pair of detected objects and applies hand-coded rules to infer relationships.

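“Riding”: Pairs a person with a bicycle, motorcycle, or horse. The rule relies only on the class labels and does not check whether the two boxes are close together.

“Under”: Compares the vertical positions (y-coordinates) of the box centers of a dog and a table. Because image y-coordinates grow downwards, a dog under a table has the larger center y-value.

“Near”: Applies to any pair not matched by an earlier rule and requires both the horizontal and the vertical distance between the box centers to be below nearThreshold (100 pixels).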
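The geometric part of these rules can be tried in isolation. The sketch below uses two hypothetical boxes in the [x y width height] format returned by detect() and expresses the center computation, an "under" test, and the "near" test as anonymous functions; boxCenter, isUnder, and isNear are illustrative names, not part of the original script. Because the image y-axis points downwards, "A under B" corresponds to A's center having the larger y-value.

```matlab
% Hypothetical bounding boxes as [x y width height]; y grows downwards
dogBox   = [200 300  80  60];
tableBox = [150 200 200 150];

% Center of a box: top-left corner plus half the width and height
boxCenter = @(b) [b(1) + b(3)/2, b(2) + b(4)/2];

cDog   = boxCenter(dogBox);    % [240 330]
cTable = boxCenter(tableBox);  % [250 275]

% "under": a larger center y-value means lower in the image
isUnder = @(cA, cB) cA(2) > cB(2);

% "near": horizontal AND vertical center offsets below the pixel threshold
nearThreshold = 100;
isNear = @(cA, cB) all(abs(cA - cB) < nearThreshold);

fprintf('dog under table: %d, dog near table: %d\n', ...
    isUnder(cDog, cTable), isNear(cDog, cTable));   % dog under table: 1, dog near table: 1
```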

The rule-based approach is fast, human-readable, and requires no additional training data; however, it only recognizes the class pairs and spatial relations encoded in its rules.
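The nested loop in Step 4 starts j at i+1, so every unordered pair of detections is visited exactly once, which gives n(n-1)/2 rule evaluations for n objects. The sketch below checks this for a hypothetical count of four detections by comparing the loop's pairs with nchoosek(1:n, 2); it only illustrates how the number of evaluations grows and is not part of the original script.

```matlab
numObjects = 4;   % hypothetical number of detections

% All unordered index pairs, in lexicographic order
pairs = nchoosek(1:numObjects, 2);

% Collect the pairs visited by the nested loop from Step 4
loopPairs = zeros(0, 2);
for i = 1:numObjects
    for j = i+1:numObjects
        loopPairs(end+1, :) = [i j]; %#ok<AGROW>
    end
end

fprintf('%d objects -> %d pairs (n(n-1)/2 = %d)\n', ...
    numObjects, size(pairs, 1), numObjects*(numObjects-1)/2);   % 4 objects -> 6 pairs (n(n-1)/2 = 6)
```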

Step 5: Visualize Detections

figure('Name', 'Detections and Relationships', 'NumberTitle', 'off', 'Position', [150 150 1000 450]);

% Left panel: display the image with detections
subplot(1,2,1);
imshow(img);
hold on;
for i = 1:size(bboxes, 1)
    rectangle('Position', bboxes(i,:), 'EdgeColor', 'r', 'LineWidth', 2);
    % Display the label above the bounding box
    text(bboxes(i,1), bboxes(i,2)-10, string(labels(i)), ...
        'Color', 'yellow', 'FontSize', 12, 'FontWeight', 'bold');
end
hold off;
title('Detected Objects');

% Right panel: prepare the relationship text with extra spacing
% Initialize the annotation text with a header and extra newlines
relationshipText = sprintf('Inferred Relationships:\n\n');

if isempty(relationships)
    relationshipText = [relationshipText, 'No significant visual relationships inferred.'];
else
    % Append each relationship, followed by two blank lines
    for i = 1:length(relationships)
        relationshipText = sprintf('%s%s\n\n\n', relationshipText, relationships{i});
    end
end

% Create an annotation textbox on the right side (normalized figure units)
annotation('textbox', [0.55 0.1 0.4 0.8], 'String', relationshipText, ...
    'FontSize', 12, 'Interpreter', 'none', 'EdgeColor', 'none', 'HorizontalAlignment', 'left');

Explanation:

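Bounding boxes are drawn on the image in the left panel, and each class label (e.g., "person", "dog") is shown just above its box. This confirms which objects the network detected. The right panel lists the relationships inferred in Step 4 in a borderless text annotation, or states that none were found.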
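The sprintf loop that builds relationshipText can also be written with strjoin, which joins all relationship strings with a separator in a single call. The sketch below uses hypothetical relationship strings; the separator of three newline characters matches the spacing of the loop, except that no trailing newlines follow the last entry.

```matlab
% Hypothetical output of Step 4 (cell array of character vectors)
relationships = {'person riding horse', 'person near dog'};

header = sprintf('Inferred Relationships:\n\n');
if isempty(relationships)
    relationshipText = [header, 'No significant visual relationships inferred.'];
else
    % Separate entries by two blank lines (three newline characters)
    separator = repmat(newline, 1, 3);
    relationshipText = [header, strjoin(relationships, separator)];
end

disp(relationshipText);
```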

Step 6: Save the complete Figure as Image

outputFileName = 'output_detected_with_relationships.png';

saveas(gcf, outputFileName);

fprintf('Combined output image saved as %s\n', outputFileName);

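Explanation: The complete figure, with the annotated image on the left and the list of inferred relationships on the right, is saved as output_detected_with_relationships.png in the current folder, and the file name is printed to the Command Window.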

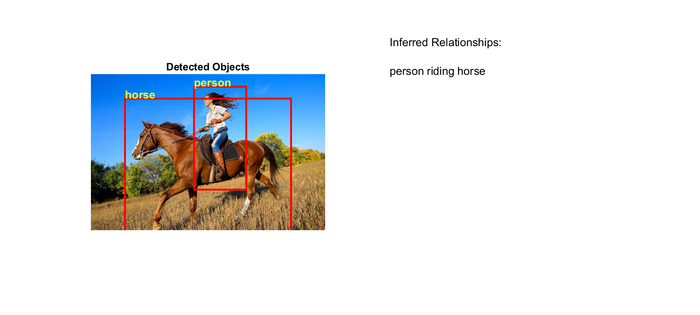

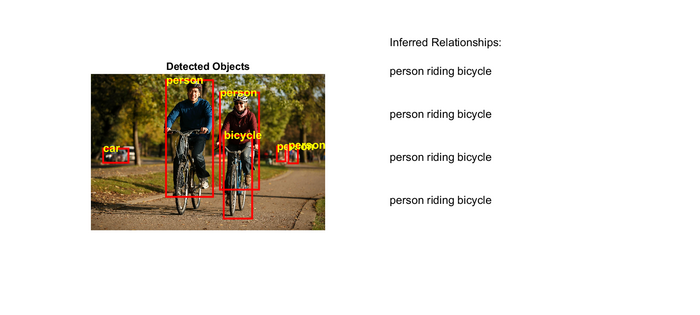
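Step 6 always writes to the same file name in the current folder, so each run overwrites the previous result. A hypothetical variant is sketched below: it derives the output name from the input image name with fileparts and creates an output folder if it does not exist yet. The folder vrd_results under tempdir is an arbitrary choice for the sketch, and the saveas call from Step 6 is left as a comment because it needs an open figure.

```matlab
% Input path as in Step 2
imageFile = fullfile('..', '..', 'Data', 'VisualRelationship', 'horseriding.jpg');

% Hypothetical output folder; replace tempdir with a project folder if preferred
outputDir = fullfile(tempdir, 'vrd_results');
if ~exist(outputDir, 'dir')
    mkdir(outputDir);   % create the folder on first use
end

% Derive the output name from the input file name without its extension
[~, baseName] = fileparts(imageFile);
outputFileName = fullfile(outputDir, sprintf('output_%s.png', baseName));

fprintf('Output would be saved as %s\n', outputFileName);
% saveas(gcf, outputFileName);   % as in Step 6, once the figure exists
```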

Testing & Proof of Functionality

The system was tested with multiple static images showing people and various objects.

Object detection and relationship inference were correct for some, but not all, of the test images.

Scientific Documentation

Documentation Format: Structured HSHL Wiki Article.

Figures: Screenshots of detections, relationships, and GUI output.

Animations: GIFs showing object detection and step-by-step relationship reasoning.

Versions: Tracked in wiki revisions.

Conclusion

This project demonstrates a lightweight yet functional AI framework for Visual Relationship Detection using MATLAB. While rule-based heuristics offer simplicity, their accuracy can be improved using data-driven models. However, for real-time and interpretable systems, they remain a strong option. A morphological analysis helped compare different techniques, and the results were scientifically validated and fully documented.

Task

Visual Relationship Detection: This involves identifying the relationships between objects within an image, such as “person riding a bicycle” or “dog under a table”. This task requires not just recognizing the objects but understanding their interactions.
