Load and Retrain a Pretrained NN with a MATLAB GUI

Discrete Values vs. GUI

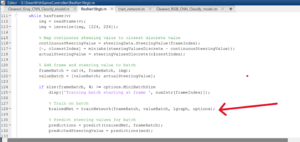

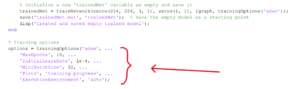

This task was about loading a pretrained NN with a MATLAB® app (GUI) by clicking through the path shown in the images above. The method is the same one used in JupyterLab. Alternatively, since I already have steering angles paired with their corresponding frames, we can train a model to predict the steering angle for new frames.

Pretrained Neural Network

A pretrained neural network is a model that has already been trained on a large dataset for a general task (e.g., image classification on ImageNet). You can fine-tune it on your smaller, task-specific dataset, reusing the features it has already learned to save time and improve performance.

Types of Pretrained NN

| Name | Examples | Description |
|---|---|---|
| Image Classification | | Pretrained NN for image classification tasks. |
| Object Detection | | Pretrained models for detecting and localizing objects. |
| Semantic Segmentation | | Pretrained NN to label each pixel in an image. |
| Super-Resolution | | Pretrained NN for upscaling low-resolution images. |
| Image Denoising | | Pretrained NN for removing noise from images. |
| Image-to-Image Translation | | Pretrained NN for tasks like style transfer and domain adaptation. |
| Instance Segmentation | | NN for detecting and segmenting individual object instances. |

All of the above models can be downloaded from [1].

How to Load, Retrain, and Fine-Tune a Pretrained NN

MATLAB has an integrated toolbox (Deep Learning Toolbox) for building, training, and testing deep learning models. ResNet-18 is the pretrained network we will load, retrain on our own data, and then fine-tune.
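As a rough command-line counterpart to the GUI steps, the following sketch loads ResNet-18 and inspects the parts that matter for retraining. It assumes Deep Learning Toolbox and the "Deep Learning Toolbox Model for ResNet-18 Network" support package are installed. It uses the `resnet18` function; newer MATLAB releases recommend `imagePretrainedNetwork` instead, so treat this as one possible route rather than the required one.

```matlab
% Load ResNet-18 together with its ImageNet-trained weights.
% Assumption: the ResNet-18 support package is installed.
net = resnet18;

% Input size the network expects; frames must be resized to match it.
inputSize = net.Layers(1).InputSize;
disp(inputSize)

% Show the last three layers. These form the task-specific "head"
% that is replaced when the network is adapted to a new task.
disp(net.Layers(end-2:end))

% Optional: open the loaded network in the Deep Network Designer app (GUI).
% deepNetworkDesigner(net)
```

Inspecting the final layers first is useful because their names are needed later when the classification head is swapped out.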

- Load: Loading a pretrained neural network means importing its saved architecture and learned weights into MATLAB’s workspace. This gives you a ready-to-use model without training from scratch [2][3].

- Retrain: Retraining means training the entire loaded network further on your own dataset. The weights of all layers are updated, usually starting from the pretrained values [4][5].

- Fine-tune: Fine-tuning freezes most of the network’s layers and updates only a subset (typically the higher-level layers) on the new data. A lower learning rate is used so that the pretrained features are not drastically altered [6][7].
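The difference between retraining and fine-tuning can be sketched in code as follows. This is a minimal illustration under several assumptions, not the exact procedure behind the GUI. It assumes the final layers of ResNet-18 are named `fc1000`, `prob`, and `ClassificationLayer_predictions` (check with `net.Layers(end-2:end)`). It assumes the task is regression on a single steering angle. The names `fc_angle`, `angle_out`, `X`, and `Y` are hypothetical, and the learning rates, epoch counts, and batch sizes are placeholder values. It uses the `layerGraph`/`trainNetwork` workflow; newer MATLAB releases recommend `trainnet`.

```matlab
% Sketch only: adapt ResNet-18 to predict one steering angle per frame.
% Assumption: Deep Learning Toolbox and the ResNet-18 support package are installed.
net = resnet18;
lgraph = layerGraph(net);

% Replace the 1000-class head with a single-output regression head.
% Layer names are assumed; verify them with net.Layers(end-2:end).
lgraph = replaceLayer(lgraph, "fc1000", fullyConnectedLayer(1, "Name", "fc_angle"));
lgraph = removeLayers(lgraph, ["prob" "ClassificationLayer_predictions"]);
lgraph = addLayers(lgraph, regressionLayer("Name", "angle_out"));
lgraph = connectLayers(lgraph, "fc_angle", "angle_out");

% Retrain: every layer stays trainable and starts from the pretrained weights.
optsRetrain = trainingOptions("sgdm", ...
    "InitialLearnRate", 1e-3, ...   % placeholder value
    "MaxEpochs", 10, ...            % placeholder value
    "MiniBatchSize", 32, ...        % placeholder value
    "Shuffle", "every-epoch");

% Fine-tune: freeze every pretrained layer by setting its learn-rate
% factors to 0, so that only the new head (fc_angle) is updated.
lgraphFrozen = lgraph;
layers = lgraph.Layers;
for k = 1:numel(layers)
    layer = layers(k);
    if strcmp(layer.Name, "fc_angle")
        continue   % keep the new head trainable
    end
    props = properties(layer);
    isFactor = endsWith(props, "LearnRateFactor");
    if any(isFactor)
        for p = props(isFactor)'
            layer.(p{1}) = 0;   % e.g. WeightLearnRateFactor, BiasLearnRateFactor
        end
        lgraphFrozen = replaceLayer(lgraphFrozen, layer.Name, layer);
    end
end

% A lower learning rate keeps the update steps small during fine-tuning.
optsFineTune = trainingOptions("sgdm", ...
    "InitialLearnRate", 1e-4, ...   % placeholder value
    "MaxEpochs", 5, ...             % placeholder value
    "MiniBatchSize", 32, ...        % placeholder value
    "Shuffle", "every-epoch");

% Hypothetical data: X is a 224-by-224-by-3-by-N array of frames,
% Y is an N-by-1 vector of steering angles.
% netRetrained = trainNetwork(X, Y, lgraph, optsRetrain);
% netFineTuned = trainNetwork(X, Y, lgraphFrozen, optsFineTune);
```

Freezing everything except the new head is the most conservative form of fine-tuning. To let some higher-level layers adapt as well, skip them in the loop in the same way `fc_angle` is skipped.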