AMR: Autonomous Navigation with a JetBot ROS AI Robot

| Author: | Neaz Mahmud |

| Type: | Project work |

| Module: | Project Work (ELE-B-2-7.02) |

| Duration: | 09.10.2023 - xx.yy.2024 |

| Working hours: | TBD |

| Supervisor: | Prof. Dr.-Ing. Schneider |

| Examination format: | Final module examination as a term paper (practical report, 10-50 pages) and an oral examination (presentation, 15 minutes) |

| Staff: | Marc Ebmeyer, Tel. 847 |

Introduction

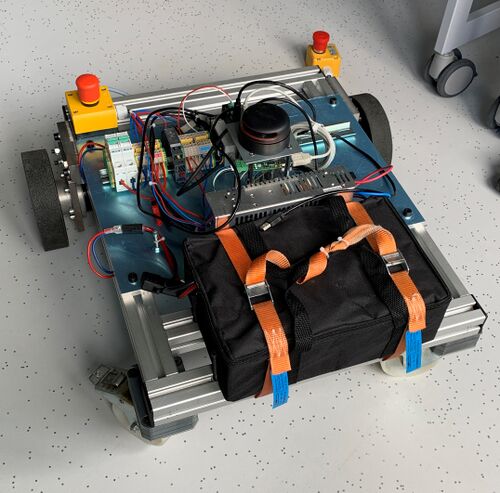

For more than two years, our Robotics and Autonomous Systems working group has been collaborating with the company Hanning on the autonomous navigation of an automated guided vehicle (AGV; German: FTF, see Fig. 1). We need student support for further development.

Tasks

- Familiarization with the existing system

- Review and improvement of the existing documentation

- Control of the AGV

- Closed-loop autonomous indoor navigation of the AGV

- Measurement and evaluation of the driving quality using the existing reference system

- Testing and scientific documentation in the HSHL wiki

Requirements

The project requires prior knowledge in the following subject areas. If you do not meet these requirements, you must acquire this knowledge yourself during the project using literature or online courses.

- Working with Linux

- C programming

- Document versioning with SVN

- Optional: Simulation with Webots

- Optional: Working with ROS2

- Optional: Particle filter SLAM

Anforderungen an die wissenschaftliche Arbeit

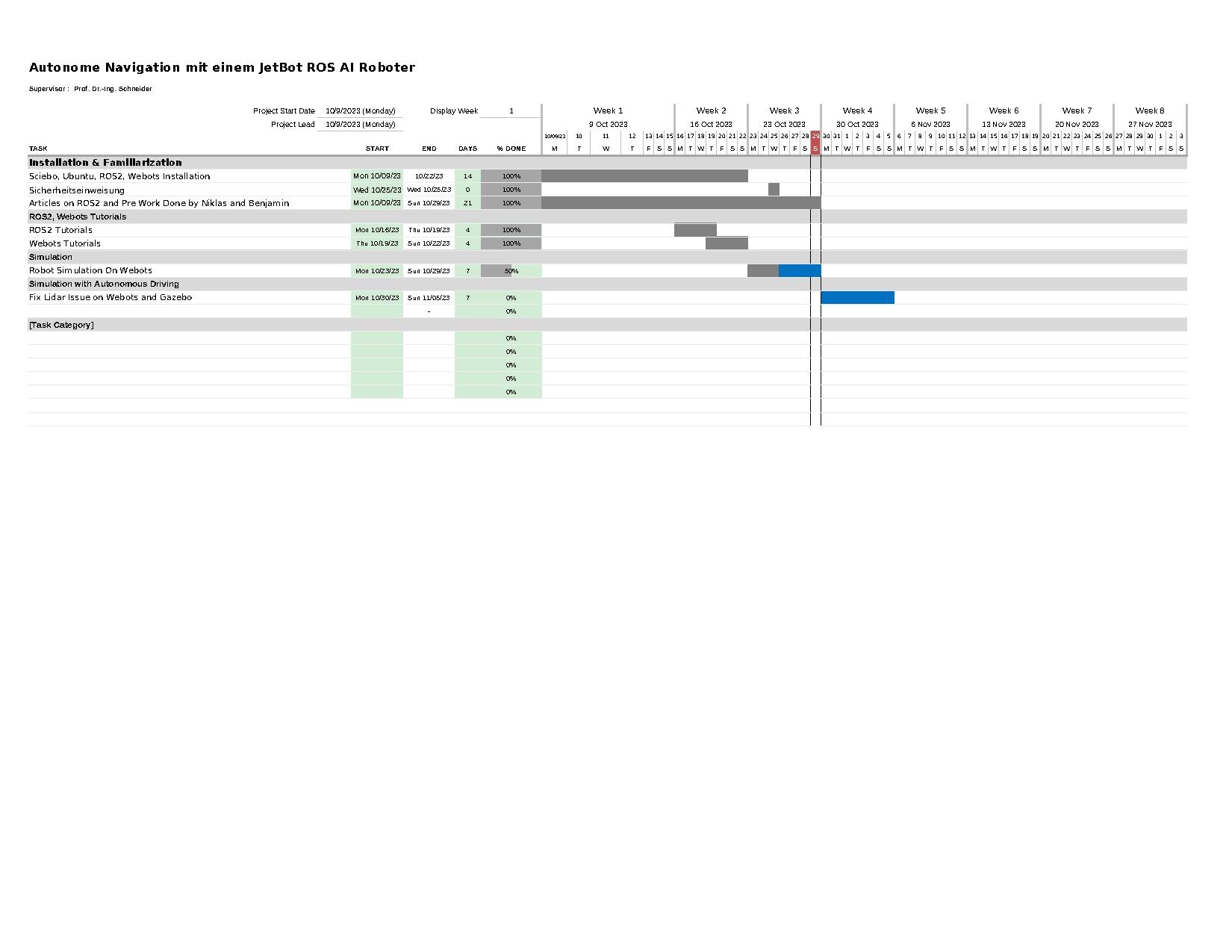

- Wissenschaftliche Vorgehensweise (Projektplan, etc.), nützlicher Artikel: Gantt Diagramm erstellen

- Wöchentlicher Fortschrittsberichte (informativ), aktualisieren Sie das Besprechungsprotokoll - Live Gespräch mit Prof. Schneider

- Projektvorstellung im Wiki

- Tägliche Sicherung der Arbeitsergebnisse in SVN

- Tägliche Dokumentation der geleisteten Arbeitsstunden

- Studentische Arbeiten bei Prof. Schneider

- Anforderungen an eine wissenschaftlich Arbeit

Getting Started

- Internship semester of N. Heiber

- Project work by B. Dilly

- Read the ROS2 tutorials

- Installation of Linux and ROS2 [1]

Software Versions

Use only this software:

- Ubuntu: 22.04.3 LTS

- ROS2: Humble Hawksbill

- Gazebo: 11.10.2

- Rviz2: 11.2.8-1

Summary

This project aims to implement autonomous navigation capabilities in a JetBot robotic platform using ROS2 (Robot Operating System). The JetBot will be equipped with a Lidar sensor to perceive its surroundings, allowing it to generate detailed maps using SLAM (Simultaneous Localization and Mapping) techniques. Additionally, the robot will utilize odometry data to refine its positional awareness within the mapped environment.

The core objectives of the project include:

- Lidar-Based Mapping: Integration of a Lidar sensor for real-time environmental sensing, enabling the JetBot to generate accurate and up-to-date maps of its surroundings.

- SLAM Implementation: Employing SLAM algorithms to simultaneously map the environment and localize the JetBot within it, ensuring adaptability to unknown or dynamic spaces.

- Odometry for Localization: Utilizing odometry data to enhance the robot's localization accuracy, particularly in scenarios where Lidar data may be limited or noisy.

- ROS Integration: Implementing and optimizing these functionalities within the ROS2 framework, leveraging its extensive libraries and tools for seamless communication and coordination between different components.

- Autonomous Guidance: Developing algorithms and control systems that enable the JetBot to autonomously navigate through indoor environments, avoiding obstacles and optimizing its path based on the mapped environment.
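To make the odometry objective more concrete, the following is a minimal sketch of dead reckoning for a differential-drive robot. It is not taken from the project code: the `Pose2D` class and the `integrate_odometry` function are hypothetical names, and the sketch assumes that a linear velocity `v` and an angular velocity `omega` are available at a fixed time step `dt`.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float = 0.0      # metres
    y: float = 0.0      # metres
    theta: float = 0.0  # radians, counter-clockwise from the x axis


def integrate_odometry(pose: Pose2D, v: float, omega: float, dt: float) -> Pose2D:
    """Advance the pose by one time step using the unicycle model.

    Hypothetical helper for illustration: the heading at the middle of the
    step is used for the translation, which reduces drift on curved paths.
    """
    mid_theta = pose.theta + 0.5 * omega * dt
    x = pose.x + v * dt * math.cos(mid_theta)
    y = pose.y + v * dt * math.sin(mid_theta)
    theta = pose.theta + omega * dt
    # Wrap the heading back into the range [-pi, pi].
    theta = math.atan2(math.sin(theta), math.cos(theta))
    return Pose2D(x, y, theta)


if __name__ == "__main__":
    pose = Pose2D()
    # Drive straight at 0.1 m/s for 10 s in 0.1 s steps.
    for _ in range(100):
        pose = integrate_odometry(pose, v=0.1, omega=0.0, dt=0.1)
    print(pose)
```

Because every step adds a small error, a pose estimated this way drifts over time, which is why odometry is typically combined with Lidar-based localization rather than used alone.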

Installation of Software and Necessary Packages

For the development of this project, we will be working on Linux (Ubuntu 22.04.3 LTS). The installation procedure is described at the following link: Ubuntu.

The next step is installing ROS2 on the system. This can be done by following the instructions on this page: ROS2 Humble.

Either Webots or Gazebo can be used for simulation. I will be using Gazebo for this project; it can be installed from Gazebo.

We will also need the Nav2 stack for the project. To install the Nav2 packages, run "sudo apt install ros-humble-navigation2" and then "sudo apt install ros-humble-nav2-bringup" in a terminal. Also install the TurtleBot3 packages by running "sudo apt install ros-humble-turtlebot3-gazebo". The following example, Nav2 Example, can be run to check that everything is properly installed.

We will also need the following packages. Run these commands in a terminal, one per line:

sudo apt-get install ros-humble-nav2-costmap-2d
sudo apt-get install ros-humble-nav2-core
sudo apt-get install ros-humble-nav2-behaviors
sudo apt-get install ros-humble-robot-localization
sudo apt-get install ros-humble-gazebo-ros-pkgs
sudo apt-get install ros-humble-turtlebot3-msgs
sudo apt-get install ros-humble-nav2-map-server
sudo apt install ros-humble-nav2-bringup

Starting With The Simulation

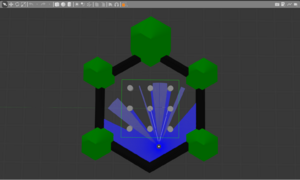

To begin, we need an environment (world) and a robot model for the simulation. One of the example worlds provided on ROBOTIS-GIT can be used, or you can create your own world for the simulation.

For the robot model, we will use the turtlebot3_waffle_pi model (Waffle_pi).

To start the simulation, first run "export TURTLEBOT3_MODEL=waffle" and then "ros2 launch turtlebot3_gazebo turtlebot3_world.launch.py" in a terminal. This opens the world with the robot model in Gazebo.

"ros2 run teleop_twist_keyboard teleop_twist_keyboard" can be used to drive the robot around inside the environment.

Mapping

This file was created for mapping the surrounding environment. Running "ros2 launch navigation_tb3 mapping.launch.py" starts publishing the /odom, /scan, and /cmd_vel topics. In another terminal, run "rviz2" to open RViz. We can now drive the robot around with teleop_twist_keyboard and map the area.
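As a rough illustration of what a mapper does with the robot pose and the /scan data, the sketch below projects Lidar ranges into the world frame and marks the cells they hit as occupied. It is a simplified stand-in, not the SLAM package used in the launch file: the function names `scan_to_points` and `mark_occupied` are hypothetical, the pose is assumed to be known, and free-space ray tracing and pose correction are omitted. The parameter names follow the fields of the `sensor_msgs/LaserScan` message, and the cell values follow the `nav_msgs/OccupancyGrid` convention (-1 unknown, 0 free, 100 occupied).

```python
import math


def scan_to_points(pose, ranges, angle_min, angle_increment, range_max):
    """Project valid Lidar ranges into world-frame (x, y) points.

    pose is (x, y, theta) of the sensor in the world frame (assumed known).
    """
    x, y, theta = pose
    points = []
    for i, r in enumerate(ranges):
        if not math.isfinite(r) or r <= 0.0 or r >= range_max:
            continue  # skip missing or out-of-range returns
        angle = theta + angle_min + i * angle_increment
        points.append((x + r * math.cos(angle), y + r * math.sin(angle)))
    return points


def mark_occupied(grid, points, resolution, origin):
    """Set every grid cell hit by a Lidar point to 100 (occupied), in place.

    grid is a list of rows, resolution is the cell size in metres, and
    origin is the world position (x, y) of the corner of cell grid[0][0].
    """
    ox, oy = origin
    for px, py in points:
        col = math.floor((px - ox) / resolution)
        row = math.floor((py - oy) / resolution)
        if 0 <= row < len(grid) and 0 <= col < len(grid[0]):
            grid[row][col] = 100
    return grid


if __name__ == "__main__":
    grid = [[-1] * 10 for _ in range(10)]  # 10 x 10 cells, all unknown
    pts = scan_to_points((0.0, 0.0, 0.0), [1.2, float("inf")], 0.0, math.pi / 2, 3.5)
    mark_occupied(grid, pts, resolution=0.5, origin=(-2.5, -2.5))
    print(pts)
```

A real SLAM implementation additionally clears the cells along each beam and corrects the pose by matching scans against the map, which this sketch leaves out.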

This map can be saved by "ros2 run nav2_map_server map_saver_cli -f tb3_map" command.
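With "-f tb3_map", the map saver writes an image file (tb3_map.pgm) and a metadata file (tb3_map.yaml) that contains, among other fields, the resolution and origin of the map. The following sketch shows how these two fields relate image pixels to world coordinates. The metadata values, the image height, and the function names are hypothetical; the sketch also assumes that the yaw component of the origin is zero.

```python
import math

# Hypothetical metadata in the shape of the "resolution" and "origin"
# fields of a saved map YAML file; real values come from tb3_map.yaml.
MAP_META = {"resolution": 0.05, "origin": [-10.0, -10.0, 0.0]}
IMAGE_HEIGHT = 400  # hypothetical image height in pixels


def world_to_pixel(x, y, meta, image_height):
    """Convert world coordinates (metres) to an image (row, col).

    The origin is the world pose of the lower-left pixel, while image
    row 0 is the top row, so the row index is flipped.
    """
    res = meta["resolution"]
    ox, oy, _yaw = meta["origin"]  # yaw is assumed to be 0 here
    col = math.floor((x - ox) / res)
    row = image_height - 1 - math.floor((y - oy) / res)
    return row, col


def pixel_to_world(row, col, meta, image_height):
    """Convert an image (row, col) to the world coordinates of the cell centre."""
    res = meta["resolution"]
    ox, oy, _yaw = meta["origin"]
    x = ox + (col + 0.5) * res
    y = oy + (image_height - 1 - row + 0.5) * res
    return x, y


if __name__ == "__main__":
    row, col = world_to_pixel(0.02, 0.02, MAP_META, IMAGE_HEIGHT)
    print(row, col, pixel_to_world(row, col, MAP_META, IMAGE_HEIGHT))
```

This kind of conversion is useful when goal points are picked from the saved image and need to be expressed in the map frame.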

Autonomous Driving

The map generated earlier can now be used to drive the robot autonomously to specific points while avoiding obstacles. This Navigation code was written to achieve that. This Launch file includes all the necessary packages and opens Gazebo and Rviz2. Run "source install/setup.bash" and then launch "ros2 launch navigation_tb3 navigation.launch.py". Here is a short video of the robot navigating through the map.
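The idea of planning a path around obstacles on the saved map can be sketched with a breadth-first search on an occupancy grid. This is only an illustration and not the planner used by Nav2, which works on costmaps and takes cell costs into account. The function name `shortest_path` and the small example grid are hypothetical; cells with value 0 are treated as free and all other cells as blocked.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid.

    grid is a list of rows, start and goal are (row, col) tuples.
    Returns the list of cells from start to goal, or None if the goal
    cannot be reached. Simplified stand-in for a global planner.
    """
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the parents to reconstruct the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] == 0 and nxt not in parents):
                parents[nxt] = cell
                queue.append(nxt)
    return None


if __name__ == "__main__":
    # Hypothetical 3 x 3 map: a wall (100) with a gap on the right side.
    grid = [
        [0, 0, 0],
        [100, 100, 0],
        [0, 0, 0],
    ]
    print(shortest_path(grid, (0, 0), (2, 0)))
```

In the example grid the only route from the top-left to the bottom-left cell leads through the gap on the right, so the returned path goes around the wall.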

Project Plan

