YOLO Model for Object Detection

YOLO Model for LEGO Parts Detection

The LEGO Parts Detection System is a computer vision application designed to automatically identify and classify specific LEGO bricks within images. This implementation utilizes the YOLO11 (You Only Look Once, version 11) architecture from the Ultralytics library. The model is trained on a custom dataset of approximately 400 images using Google Colab and NVIDIA GPU acceleration.

Overview

Object detection involves locating instances of objects of certain classes within an image. Unlike standard image classification (which assigns a single label to an image), this YOLO model predicts:

- Bounding Boxes: The spatial coordinates of the LEGO part.

- Class Probabilities: A score for each candidate class, indicating the specific type of LEGO brick (e.g., "2x4 Brick", "Technic Pin").

The system uses the `yolo11s` (Small) model variant, optimized for a balance between inference speed and detection accuracy, making it suitable for real-time applications.

Dataset Preparation

The performance of the model relies on a curated dataset processed through the following pipeline:

Data Collection and Annotation

- Source: 400 images containing target LEGO parts in various orientations, lighting conditions, and backgrounds.

- Annotation Tool: Label Studio.

- Label Format: YOLO standard format, where each image has a corresponding `.txt` file containing one line per object in the format `<class_id> <x_center> <y_center> <width> <height>`. All coordinates are normalized between 0 and 1.
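To make the label format concrete, the following minimal sketch converts one normalized label line back into pixel corner coordinates. The function name and the sample label are hypothetical and chosen only for illustration.

```python
def yolo_to_pixel_box(line: str, img_w: int, img_h: int):
    """Convert one YOLO label line to (class_id, x_min, y_min, x_max, y_max) in pixels."""
    class_id, xc, yc, w, h = line.split()
    # Scale the normalized center and size back to pixel units.
    xc, w = float(xc) * img_w, float(w) * img_w
    yc, h = float(yc) * img_h, float(h) * img_h
    return int(class_id), xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2


# Hypothetical label: class 0, centered, half the image wide and a quarter tall.
print(yolo_to_pixel_box("0 0.5 0.5 0.5 0.25", img_w=640, img_h=480))
# (0, 160.0, 180.0, 480.0, 300.0)
```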

Preprocessing

Before training, the dataset is split to ensure robust evaluation:

- Training Set: 90% of images (used to update model weights).

- Validation Set: 10% of images (used to evaluate performance during training).

- Configuration: A `data.yaml` file is generated dynamically to map the directory paths and class names for the training engine.
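The split and the configuration file could be produced along the following lines. This is a sketch under assumptions rather than the project's actual script: the helper names, the fixed seed, the `/content/data` root, and the `train/images` and `validation/images` sub-folders are all illustrative. The keys `path`, `train`, `val`, and `names` are the ones Ultralytics reads from a dataset YAML.

```python
import random


def split_dataset(image_names, val_fraction=0.1, seed=0):
    """Shuffle file names deterministically and return (train, val) lists."""
    names = sorted(image_names)
    random.Random(seed).shuffle(names)
    n_val = max(1, round(len(names) * val_fraction))
    return names[n_val:], names[:n_val]


def build_data_yaml(root, class_names):
    """Return the text of a data.yaml file for the given dataset root and classes."""
    lines = [
        f"path: {root}",  # dataset root (assumed location)
        "train: train/images",  # assumed folder layout, relative to path
        "val: validation/images",
        "names:",
    ]
    lines += [f"  {i}: {name}" for i, name in enumerate(class_names)]
    return "\n".join(lines) + "\n"


# Placeholder file names standing in for the roughly 400 collected images.
train, val = split_dataset([f"img_{i:03d}.jpg" for i in range(400)])
print(len(train), len(val))  # 360 40

yaml_text = build_data_yaml("/content/data", ["2x4 Brick", "Technic Pin"])
print(yaml_text)
```

Fixing the seed keeps the validation set identical across runs, so results from different training runs stay comparable.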

Network Architecture

The YOLO11 architecture is a single-stage object detector. It processes the entire image in a single forward pass, distinguishing it from two-stage detectors like R-CNN. The architecture consists of three main components:

| Component | Function | Description |
|---|---|---|
| Backbone | Feature Extraction | A Convolutional Neural Network (based on CSPDarknet) that downsamples the image to extract distinct features (edges, textures, shapes) at different scales. It utilizes C3k2 blocks (Cross Stage Partial networks with specific kernel sizes) to improve gradient flow and reduce computational cost. |
| Neck | Feature Fusion | Uses PANet (Path Aggregation Network) layers to combine features from different backbone levels. This ensures that the model can detect both large (close-up) and small (distant) LEGO parts effectively. |
| Head | Prediction | A decoupled head that separates the classification task (what is it?) from the regression task (where is it?). It outputs the final bounding boxes and class scores. |

Mathematical Description of Core Operations

SiLU Activation Function

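The hidden layers of the network use the Sigmoid Linear Unit (SiLU) activation function, defined as

$$\mathrm{SiLU}(x) = x \cdot \sigma(x) = \frac{x}{1 + e^{-x}}$$

where $\sigma$ is the logistic sigmoid. Because it is smooth and non-zero for negative inputs, it allows for smoother gradient propagation than the traditional ReLU.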
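As a quick numerical illustration, SiLU multiplies its input by the logistic sigmoid of that input. The sketch below compares it with ReLU on a few sample values. It is a plain-Python illustration, not the vectorized implementation a deep learning framework would use.

```python
import math


def silu(x: float) -> float:
    """SiLU(x) = x * sigmoid(x), written as x / (1 + exp(-x))."""
    return x / (1.0 + math.exp(-x))


def relu(x: float) -> float:
    """ReLU(x) = max(0, x), shown for comparison."""
    return max(0.0, x)


for x in (-2.0, -0.5, 0.0, 0.5, 2.0):
    print(f"x={x:+.1f}  silu={silu(x):+.4f}  relu={relu(x):+.4f}")
```

Unlike ReLU, SiLU returns small negative values for negative inputs instead of a hard zero, so the gradient does not vanish abruptly there.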

Intersection over Union (IoU)

To measure how well a predicted box overlaps with the ground truth box during training, the Intersection over Union metric is used:

$$\mathrm{IoU} = \frac{|B_p \cap B_{gt}|}{|B_p \cup B_{gt}|}$$

where $B_p$ is the predicted bounding box and $B_{gt}$ is the ground truth box.
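A minimal sketch of the IoU computation for axis-aligned boxes follows. It assumes boxes are given as `(x_min, y_min, x_max, y_max)` corner tuples, which is a choice made for this example rather than something the original text specifies.

```python
def iou(box_a, box_b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    # Corners of the overlapping rectangle (empty if the boxes do not intersect).
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Two 10x10 boxes that share half their area: intersection 50, union 150.
print(iou((0, 0, 10, 10), (5, 0, 15, 10)))
```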

Loss Function

The model optimizes a composite loss function that combines three distinct error measurements:

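- Box Loss ($L_{box}$): Measures the error in the coordinate predictions. YOLO11 typically uses CIoU (Complete IoU) loss, which accounts for overlap, center point distance, and aspect ratio consistency.

- Class Loss ($L_{cls}$): Measures the error in classification using Binary Cross Entropy (BCE), which in its standard form is:

$$L_{cls} = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log(p_i) + (1 - y_i) \log(1 - p_i) \right]$$

where $y_i$ is the target label and $p_i$ is the predicted probability for the $i$-th class score.

- DFL Loss ($L_{dfl}$): Distribution Focal Loss, used to refine the localization of the bounding box boundaries.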
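The sketch below illustrates the classification term and how the three terms might be combined into a single scalar. The function names are hypothetical. The gains `7.5`, `0.5`, and `1.5` are assumptions believed to match the Ultralytics default hyperparameters `box`, `cls`, and `dfl`, so check them against the installed version. The actual training loss also uses soft targets and its own normalization, which this example omits.

```python
import math


def bce(targets, probs, eps=1e-7):
    """Mean binary cross-entropy between 0/1 targets and predicted probabilities."""
    total = 0.0
    for y, p in zip(targets, probs):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(targets)


def total_loss(box, cls, dfl, w_box=7.5, w_cls=0.5, w_dfl=1.5):
    """Weighted sum of the three loss terms (gains are assumed defaults)."""
    return w_box * box + w_cls * cls + w_dfl * dfl


# One object whose true class is index 0 out of three candidate classes.
cls_loss = bce(targets=[1, 0, 0], probs=[0.9, 0.2, 0.1])
print(round(cls_loss, 4))
print(total_loss(box=0.05, cls=cls_loss, dfl=0.9))  # placeholder box/dfl values
```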

Non-Maximum Suppression (NMS)

During inference, the model may predict multiple overlapping boxes for a single LEGO part. NMS filters these to keep only the best prediction.

- Select the box with the highest confidence score.

- Calculate IoU between this box and all other boxes.

- Discard boxes whose IoU with the selected box exceeds a set threshold (e.g., 0.5).

- Repeat with the remaining boxes until none are left.
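The greedy procedure described in the steps above can be sketched in plain Python as follows. This version is class-agnostic and uses `(x_min, y_min, x_max, y_max)` tuples for brevity. Both are simplifications made for this example, and a production pipeline would normally rely on the library's built-in, vectorized NMS.

```python
def iou(a, b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Return the indices of the boxes kept by greedy non-maximum suppression."""
    # Candidate indices, highest confidence first.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Drop every remaining box that overlaps the kept box too strongly.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep


# Two near-duplicate detections of one part plus a separate part elsewhere.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]
```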

Implementation

Training Configuration

The model is trained using the Ultralytics `yolo` command-line interface, run from a Colab notebook cell (hence the leading `!`). The training process runs for 60 epochs with an image size of 640 pixels.

    !yolo detect train \
      data=/content/data.yaml \
      model=yolo11s.pt \
      epochs=60 \
      imgsz=640
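For reference, the same run can also be started from the Ultralytics Python API instead of the command line. The wrapper below is an illustrative sketch: `train_lego_detector` and `TRAIN_ARGS` are names invented for this example, while `YOLO(...)` and `model.train(...)` are the library calls. The package is imported inside the function so the snippet can be loaded without Ultralytics installed.

```python
# Settings mirrored from the command above.
TRAIN_ARGS = {"data": "/content/data.yaml", "epochs": 60, "imgsz": 640}


def train_lego_detector(weights="yolo11s.pt", **overrides):
    """Fine-tune a pretrained YOLO11 checkpoint and return the training results."""
    from ultralytics import YOLO  # requires `pip install ultralytics`

    model = YOLO(weights)  # loads the pretrained small variant
    return model.train(**{**TRAIN_ARGS, **overrides})


# In a Colab cell with a GPU runtime, start training with:
# train_lego_detector()
```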

Inference

Once trained, the best weights (`best.pt`) are used to predict classes on new images.

    !yolo detect predict \
      model=runs/detect/train/weights/best.pt \
      source=data/validation/images \
      save=True
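When the detections are needed programmatically rather than only as saved images, the Python API exposes them on the result objects. The sketch below is a hedged example: `summarize` and `predict_lego_parts` are hypothetical helper names, and the paths are copied from the command above. It uses the `names`, `path`, `boxes.cls`, and `boxes.conf` attributes of Ultralytics results.

```python
def summarize(names, class_ids, confidences):
    """Format parallel lists of class ids and confidences as readable strings."""
    return [f"{names[int(c)]}: {conf:.2f}" for c, conf in zip(class_ids, confidences)]


def predict_lego_parts(weights="runs/detect/train/weights/best.pt",
                       source="data/validation/images"):
    """Run inference on every image in `source` and print the detected parts."""
    from ultralytics import YOLO  # requires `pip install ultralytics`

    model = YOLO(weights)
    for result in model.predict(source=source, save=True):
        boxes = result.boxes
        print(result.path, summarize(result.names, boxes.cls.tolist(), boxes.conf.tolist()))


# Formatting check with made-up detections (no model needed):
print(summarize({0: "2x4 Brick", 1: "Technic Pin"}, [1.0, 0.0], [0.912, 0.5]))
# ['Technic Pin: 0.91', '2x4 Brick: 0.50']
```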

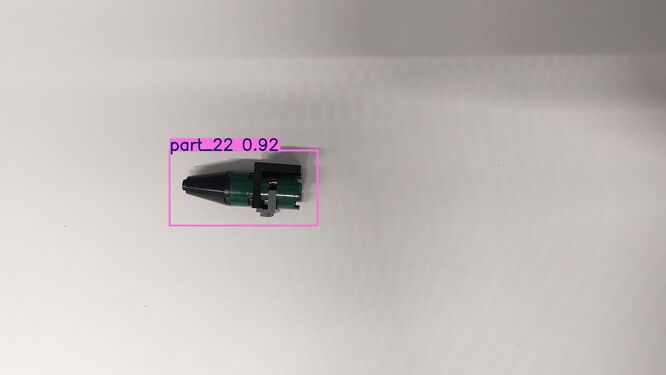

Evaluation and Results

Upon completion of the training phase, the model's performance is qualitatively evaluated by running inference on unseen images from the validation set. The output consists of the original input images overlaid with prediction annotations.