Autonomous Driving Analysis, Summary, and Conclusion

Materials and Setup

- Jetson Nano Developer Kit

- USB Gamepad

- MATLAB® R2020b (compatible with CUDA 10.2) [1]

- Wi-Fi connection between Jetson Nano and host PC

Experiment Design and Requirements

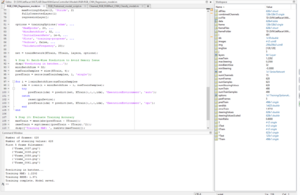

- MATLAB® was used to connect to the Jetson Nano via SSH.

- The Gamepad receiver had to be physically connected to the Jetson Nano.

- MATLAB® controlled the Gamepad indirectly using SSH commands to access libraries on the Jetson.

- Data such as images and steering values were collected and transferred via Wi-Fi.

[[File:matlab_ssh_code.jpg|frame|Fig. 3: SSH code snippet for connecting MATLAB to the JetRacer]]
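The indirect control path described above can be sketched as follows. This is a minimal illustration in Python, not the project's MATLAB code: the user name, IP address, and remote script path are hypothetical placeholders, and the functions only assemble the standard `ssh user@host command` invocation without running it.

```python
import shlex


def build_ssh_command(user, host, remote_command):
    """Return the argument list for running remote_command on host via ssh."""
    # ssh hands everything after the destination to the remote shell,
    # so the remote command is passed as one single argument.
    return ["ssh", f"{user}@{host}", remote_command]


def remote_python_call(script_path, *args):
    """Build a remote shell command that runs a Python script with quoted args."""
    # shlex.quote protects arguments containing spaces or shell metacharacters.
    quoted = " ".join(shlex.quote(str(a)) for a in (script_path, *args))
    return f"python3 {quoted}"


# Hypothetical example: start a gamepad-reading script on the Jetson Nano.
command = build_ssh_command(
    "jetson",
    "192.168.0.10",
    remote_python_call("/home/jetson/read_gamepad.py", "--rate", 20),
)
print(command)
```

To actually execute the command, the list could be passed to `subprocess.run(command, check=True)`, assuming key-based SSH authentication is already set up between the host PC and the Jetson Nano.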

Experiment Procedure

Step 1: System Connection and Gamepad Control

Since MATLAB® could not access the Gamepad receiver directly, a method based on SSH commands was developed to call the Jetson Nano libraries in the background without delay or signal loss.

Step 2: Data Collection

- Data was collected using the integrated camera at 256×256 pixel resolution.

- Real-time steering commands were logged and synchronized with images.

- To reduce image distortion, camera calibration was applied using the parameters stored in calibration256by256.mat.
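One way to synchronize logged steering commands with images is to assign each frame the steering value whose timestamp is closest. The sketch below assumes both streams carry timestamps in seconds and that the steering log is sorted by time; the source does not state how synchronization was implemented, so the data layout and function name are illustrative only.

```python
from bisect import bisect_left


def nearest_steering(frame_time, steering_log):
    """Return the steering value logged closest in time to frame_time.

    steering_log is a non-empty list of (timestamp, value) tuples
    sorted by timestamp.
    """
    times = [t for t, _ in steering_log]
    i = bisect_left(times, frame_time)
    # Candidates are the entries just before and just after the frame time.
    candidates = steering_log[max(i - 1, 0):i + 1]
    return min(candidates, key=lambda entry: abs(entry[0] - frame_time))[1]


# Hypothetical log: (time in seconds, steering value in [-1, 1]).
steering_log = [(0.00, -0.5), (0.10, 0.0), (0.20, 0.5)]
frame_times = [0.04, 0.16, 0.30]
labels = [nearest_steering(t, steering_log) for t in frame_times]
print(labels)
```

Frames recorded before the first or after the last log entry simply receive the first or last steering value; a stricter pipeline might instead drop frames whose nearest entry is too far away in time.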

[[File:.png|frame|Fig. 6: Camera setup for image with calibration]]

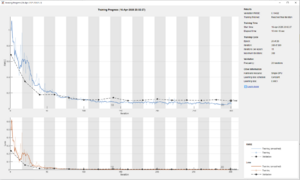

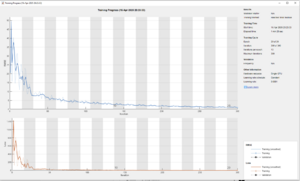

Step 3: Neural Network Training

- Initially, a pretrained network was used with RGB images, but the results were poor. The following parameters were used:

- Regression methods were then tried but also performed poorly. The following parameters were used:

- The project then switched to **classification** using **discrete steering values**. The following parameters were used:

- MATLAB® Apps (GUI) were used to manually annotate images by clicking the correct path.
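The switch from regression to classification over discrete steering values can be illustrated with a simple binning scheme. The number of classes and the steering range of -1 to 1 are assumptions made for this sketch; the source does not state the values that were actually used.

```python
def steering_to_class(value, n_classes=5, low=-1.0, high=1.0):
    """Map a continuous steering value in [low, high] to a class index."""
    clipped = min(max(value, low), high)
    width = (high - low) / n_classes
    # The upper bound would fall into bin n_classes, so cap the index.
    return min(int((clipped - low) / width), n_classes - 1)


def class_to_steering(index, n_classes=5, low=-1.0, high=1.0):
    """Return the centre of a bin, usable as the steering command at inference."""
    width = (high - low) / n_classes
    return low + (index + 0.5) * width


# Hypothetical samples: hard left, straight, slight right, hard right.
samples = [-1.0, 0.0, 0.3, 1.0]
classes = [steering_to_class(s) for s in samples]
print(classes)
```

With this scheme the network only has to choose among a handful of labels, and the predicted class is converted back to a steering command with `class_to_steering`.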

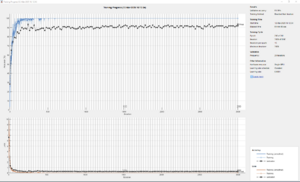

Step 4: System Optimization

- Lane tracking algorithms were integrated to automatically label lane positions.

- Additional features like lane angles were extracted to improve model inputs.

- Grayscale image recordings were used to achieve better generalization and faster processing.

- The network was still trained as a **classification** model. The following parameters were used:
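The two preprocessing ideas in this step, grayscale conversion and a lane-angle feature, can be sketched as below. How the project computed the lane angle is not described in the source; this example assumes the lane tracker provides two points on the lane in pixel coordinates, and it uses the common ITU-R BT.601 luminance weights for the grayscale conversion.

```python
import math


def rgb_to_gray(pixel):
    """Convert one (r, g, b) pixel to luminance with the ITU-R BT.601 weights."""
    r, g, b = pixel
    return 0.299 * r + 0.587 * g + 0.114 * b


def lane_angle_deg(near_point, far_point):
    """Angle of the lane segment relative to straight ahead, in degrees.

    Points are (x, y) pixel coordinates with y growing downwards, so the far
    point has the smaller y. Positive angles mean the lane bends to the right.
    """
    dx = far_point[0] - near_point[0]
    dy = near_point[1] - far_point[1]  # flip sign so "ahead" is positive
    return math.degrees(math.atan2(dx, dy))


# Hypothetical lane points in a 256x256 image.
print(lane_angle_deg((128, 255), (128, 100)))
print(lane_angle_deg((100, 200), (150, 150)))
```

A single-channel image has one third of the values of an RGB image, which is consistent with the faster processing reported above.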

[[File:.png|frame|Fig. 13: Camera setup for image recording during driving]]

Experiment Analysis

Training Results

- Models trained on discrete steering values showed significantly better results than regression-based approaches.

- Grayscale recordings increased model stability.

- Calibrated images helped reduce the steering error caused by lens distortion.

Limitations

- Hardware constraints limited the maximum processing speed.

- Initial data quality was poor due to randomized manual steering input.

- Better results were obtained after switching to discrete, consistent control signals.

- However, simultaneous recording, steering, and saving caused performance bottlenecks due to the Jetson Nano’s limited resources.

Summary and Outlook

This project demonstrated that it is possible to optimize autonomous navigation on the JetRacer using a combination of classical control algorithms and neural networks. Despite hardware limitations, stable autonomous driving behavior was achieved.

Future improvements could include:

- Expanding the dataset with more diverse environmental conditions.

- Deploying the working model directly to the Jetson Nano using GPU Coder.

- Using a more accurate gamepad and a more complex training method.

- Improving automated labeling and refining the PD controller parameters for faster driving without loss of robustness.
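For reference, a discrete PD controller of the kind mentioned in the last point can be sketched as follows. The gains and the definition of the error (for example, the lateral offset of the lane centre from the image centre) are assumptions for illustration; the source does not give the project's controller parameters.

```python
class PDController:
    """Discrete PD controller: u = kp * e + kd * (e - e_prev) / dt."""

    def __init__(self, kp, kd):
        self.kp = kp
        self.kd = kd
        self.prev_error = None

    def update(self, error, dt):
        """Return the control output for the current error and time step dt."""
        # No derivative term on the first call because there is no previous error.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.kd * derivative


# Hypothetical gains and a 50 ms control period.
controller = PDController(kp=0.5, kd=0.1)
first = controller.update(error=0.2, dt=0.05)
second = controller.update(error=0.1, dt=0.05)
print(first, second)
```

Raising `kp` makes the car react more strongly to the current offset, while `kd` damps the response as the error changes, which is the trade-off that matters when driving faster without losing robustness.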