Autonomous Driving Analysis, Summary and Conclusion: Difference between revisions


== Autonomous Driving Neural Network Training ==

=== Training Results ===

* Models trained on discrete steering values showed significantly better results than regression-based approaches.
* Grayscale recordings increased model stability.
* Calibrated images helped reduce the steering error caused by lens distortion.

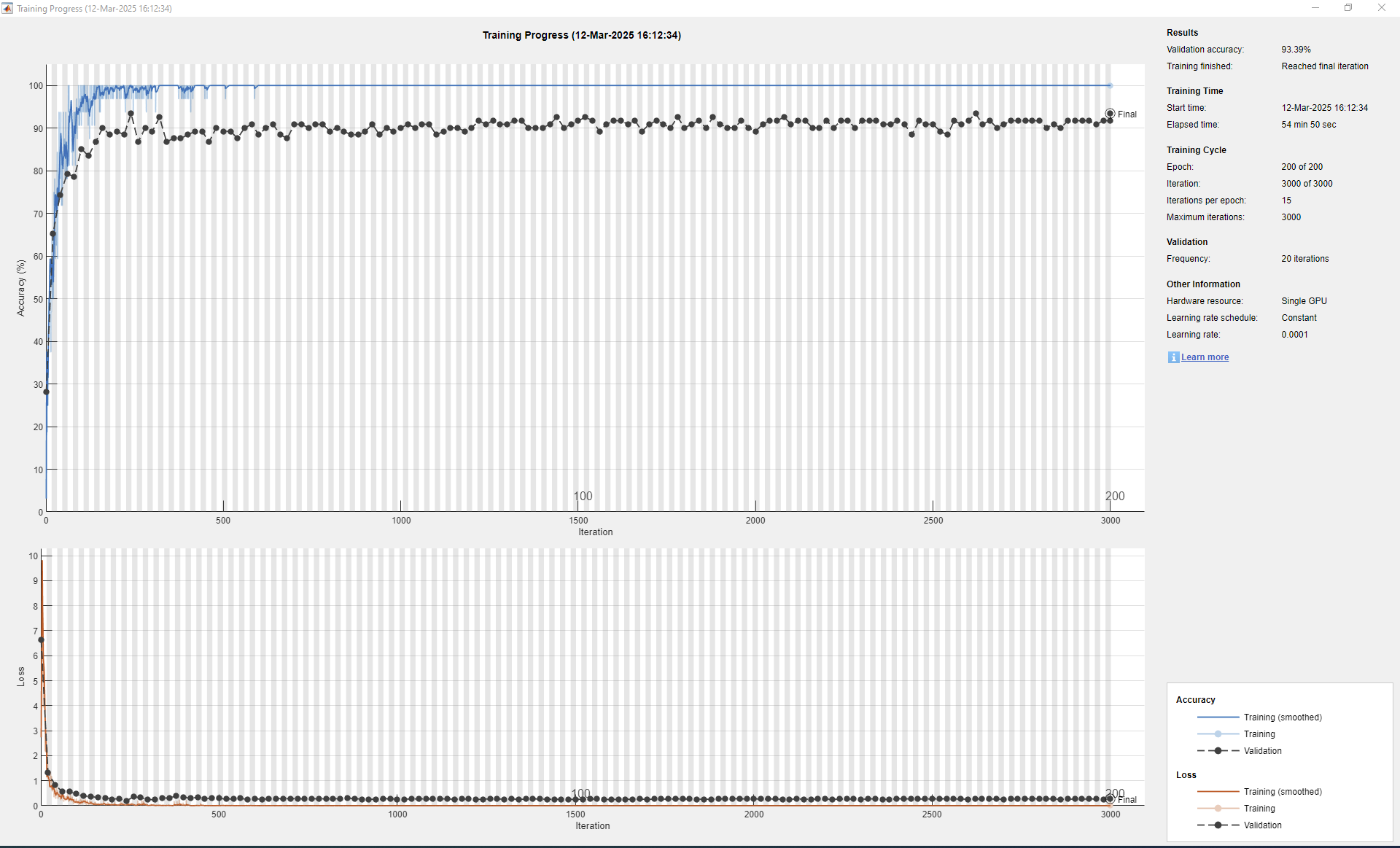

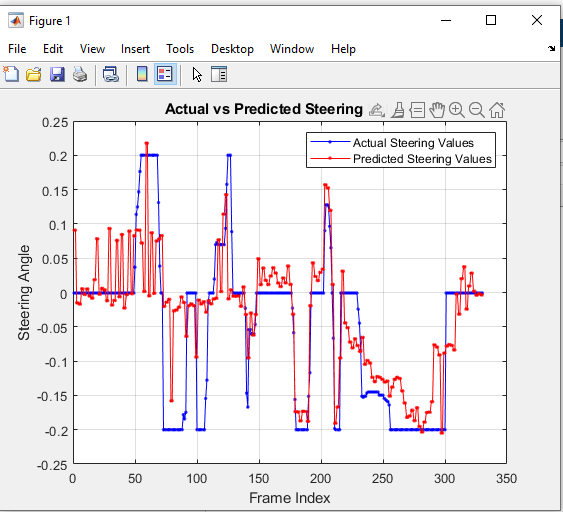

* The project switched to **classification** using **discrete steering values**, with the parameters shown in the figures below:

[[Datei:Cleaned CNN Classify.png||Fig. 38: Classification Training]]

[[Datei:regression.png||Fig. 39: Classification Result]]

More details on further work on autonomous driving can be found here[https://wiki.hshl.de/wiki/index.php/JetRacer:_Autonomous_Driving,_Obstacle_Detection_and_Avoidance_using_AI_with_MATLAB].
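The switch from regression to classification described above can be illustrated with a minimal sketch. It is written in Python for brevity, not in the project's MATLAB code, and the class centers in `STEERING_CLASSES` are hypothetical: the actual discrete steering values used in the project are not listed on this page.

```python
# Hypothetical class centers in a normalized steering range of [-1, 1].
# The real discrete steering values of the project are not given here.
STEERING_CLASSES = [-1.0, -0.5, 0.0, 0.5, 1.0]


def steering_to_class(steering: float) -> int:
    """Label a recorded steering value with the index of the nearest class center."""
    return min(
        range(len(STEERING_CLASSES)),
        key=lambda i: abs(STEERING_CLASSES[i] - steering),
    )


def class_to_steering(index: int) -> float:
    """Map a class index back to the steering command it represents."""
    return STEERING_CLASSES[index]


def predict_steering(class_scores: list[float]) -> float:
    """Pick the steering command of the highest-scoring class (argmax)."""
    best = max(range(len(class_scores)), key=lambda i: class_scores[i])
    return class_to_steering(best)


if __name__ == "__main__":
    print(steering_to_class(0.1))                        # index of the class center nearest to 0.1
    print(predict_steering([0.1, 0.2, 0.1, 0.5, 0.1]))   # command of the top-scoring class
```

With this kind of labeling the network only has to choose among a few consistent targets, which is one plausible reason why noisy manual steering input hurts a classifier less than a regression model.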

== Experiment Analysis ==

=== Limitations ===

* Hardware constraints limited the maximum processing speed (without GPU Coder).
* The camera could not deliver 50 fps, and the recording resolution was limited to 256 x 256 pixels.
* Initial data quality was poor due to randomized manual steering input.
* Better results were obtained after switching to discrete, consistent control signals.
* However, simultaneous recording, steering, and saving caused performance bottlenecks due to the Jetson Nano’s limited resources.

Note: I have proposed alternatives and solutions to the limitations above but could not implement them myself due to time constraints.
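The bottleneck from recording, steering, and saving in the same loop can be made concrete with a little frame-budget arithmetic. The sketch below is in Python, and the per-stage timings are placeholders chosen only for illustration; they are not measurements from the Jetson Nano.

```python
def frame_budget_ms(target_fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    if target_fps <= 0:
        raise ValueError("target_fps must be positive")
    return 1000.0 / target_fps


def achievable_fps(stage_times_ms: dict[str, float]) -> float:
    """Frame rate reachable when all stages run one after another in one loop."""
    total_ms = sum(stage_times_ms.values())
    if total_ms <= 0:
        raise ValueError("total stage time must be positive")
    return 1000.0 / total_ms


if __name__ == "__main__":
    # Placeholder timings (assumed, not measured on the Jetson Nano).
    stages = {"capture": 12.0, "steer": 3.0, "save": 25.0}
    print(f"budget at 50 fps: {frame_budget_ms(50):.1f} ms per frame")
    print(f"sequential loop:  {achievable_fps(stages):.1f} fps")
```

Whenever the summed stage times exceed the per-frame budget, the loop cannot hold the target rate. Moving the slowest stage (here assumed to be saving) out of the loop is then the obvious candidate for improvement.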

== Summary and Outlook ==

This experiment aimed to use the Jetson Nano and MATLAB to complete various tasks, from "Familiarization with the existing framework" to "Autonomous Driving." Although the final goal of the car driving several laps in the right lane could not be attained, there is plenty of room for future improvement in the following areas:

* Improving the training method (more data, different training algorithms such as reinforcement learning, etc.).
* Expanding the dataset with more diverse environmental conditions.
* Deploying the working model directly on the Jetson Nano using GPU Coder.
* Using a more accurate gamepad.
* Improving automated labeling and refining the PD controller parameters for faster driving without loss of robustness.
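For readers unfamiliar with the PD controller mentioned in the last point, a minimal discrete-time sketch is shown here. It is written in Python, not taken from the project's MATLAB code. The gains, the error definition (lateral offset from the lane center), and the `[-1, 1]` steering range are all assumptions made for illustration.

```python
class PDController:
    """Discrete PD controller: output = kp * error + kd * d(error)/dt."""

    def __init__(self, kp: float, kd: float) -> None:
        self.kp = kp
        self.kd = kd
        self.prev_error = None  # no derivative is available on the first call

    def update(self, error: float, dt: float) -> float:
        if dt <= 0:
            raise ValueError("dt must be positive")
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.kd * derivative


def clamp(value: float, low: float = -1.0, high: float = 1.0) -> float:
    """Limit a steering command to the assumed actuator range."""
    return max(low, min(high, value))


if __name__ == "__main__":
    controller = PDController(kp=0.5, kd=0.1)   # illustrative gains only
    for lateral_error in (0.2, 0.1, 0.0):       # hypothetical lane offsets
        command = clamp(controller.update(lateral_error, dt=0.1))
        print(f"error={lateral_error:+.2f} -> steering={command:+.3f}")
```

The proportional term steers toward the lane center, and the derivative term damps the response as the error shrinks. Tuning `kp` and `kd` together is what trades driving speed against robustness.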

Current revision as of 12 June 2025, 22:36
