Current revision as of 10 February 2026, 13:05

Convolutional Neural Network for Image Classification

A Convolutional Neural Network (CNN) is a class of deep neural networks, most commonly applied to analyzing visual imagery. This article describes a specific implementation of a CNN using the TensorFlow and Keras libraries to classify images from the CIFAR-10 dataset.

Overview

The model described here classifies low-resolution color images ($32 \times 32$ pixels) into one of ten classes (e.g., airplanes, automobiles, birds, cats). The implementation uses a sequential architecture: a convolutional base for feature extraction, followed by a dense network for classification.

Convolutional Neural Networks are the most common deep learning models used for computer vision. Unlike fully connected networks, which treat an image as one long list of numbers, CNNs preserve the shape and relative position of image regions.[1]

Dataset

The system is trained on the CIFAR-10 dataset, which consists of 60,000 $32 \times 32$ color images in 10 classes, with 6,000 images per class.

- Training set: 50,000 images

- Test set: 10,000 images

- Preprocessing: Pixel values are normalized to the range [0, 1] by dividing by 255.0 to accelerate convergence during gradient descent.
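The preprocessing step can be sketched as follows. This is a minimal illustration using NumPy, not the article's own code: the helper name `normalize_pixels` is hypothetical, and a small synthetic array stands in for the real images so that the snippet runs without downloading anything.

```python
import numpy as np

def normalize_pixels(images):
    """Scale uint8 pixel values from [0, 255] to floats in [0, 1]."""
    return images.astype("float32") / 255.0

# Synthetic stand-in for a batch of CIFAR-10 images: (batch, height, width, channels).
# Real data would typically come from tf.keras.datasets.cifar10.load_data().
rng = np.random.default_rng(seed=0)
fake_batch = rng.integers(0, 256, size=(4, 32, 32, 3), dtype=np.uint8)

scaled = normalize_pixels(fake_batch)
print(scaled.shape, scaled.dtype, float(scaled.min()), float(scaled.max()))
```

The same scaling has to be applied to any image passed to the model later, since the network only ever sees inputs in the [0, 1] range during training.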

Network Architecture

The architecture follows a sequential pattern: Conv2D $\rightarrow$ MaxPooling $\rightarrow$ Dense. The specific layer configuration and parameter counts are detailed below:

Model: "sequential"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| conv2d (Conv2D) | (None, 30, 30, 32) | 896 |
| max_pooling2d (MaxPooling2D) | (None, 15, 15, 32) | 0 |
| conv2d_1 (Conv2D) | (None, 13, 13, 64) | 18,496 |
| max_pooling2d_1 (MaxPooling2D) | (None, 6, 6, 64) | 0 |
| conv2d_2 (Conv2D) | (None, 4, 4, 64) | 36,928 |
| flatten (Flatten) | (None, 1024) | 0 |
| dense (Dense) | (None, 64) | 65,600 |
| dense_1 (Dense) | (None, 10) | 650 |

Total params: 122,570 (478.79 KB)

Trainable params: 122,570 (478.79 KB)

Non-trainable params: 0 (0.00 B)
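The output shapes and parameter counts in the summary can be reproduced by hand. The sketch below does the arithmetic in plain Python. It assumes $3 \times 3$ kernels with no padding and stride 1, and $2 \times 2$ pooling; these settings are inferred from the shapes in the table rather than stated in the article, and the helper names are hypothetical.

```python
def conv2d(shape, filters, kernel=3):
    """Valid-padding, stride-1 convolution: returns (output shape, param count)."""
    h, w, c = shape
    params = (kernel * kernel * c + 1) * filters  # weights plus one bias per filter
    return (h - kernel + 1, w - kernel + 1, filters), params

def max_pool(shape, pool=2):
    """Non-overlapping pooling: spatial size is floor-divided, no parameters."""
    h, w, c = shape
    return (h // pool, w // pool, c), 0

def dense(units_in, units_out):
    """Fully connected layer: weight matrix plus one bias per output unit."""
    return units_out, (units_in + 1) * units_out

shape, total = (32, 32, 3), 0
for step in (lambda s: conv2d(s, 32), max_pool,
             lambda s: conv2d(s, 64), max_pool,
             lambda s: conv2d(s, 64)):
    shape, params = step(shape)
    total += params

flat = shape[0] * shape[1] * shape[2]  # Flatten: 4 * 4 * 64 = 1024
for units in (64, 10):
    flat, params = dense(flat, units)
    total += params

print(shape, total)  # (4, 4, 64) 122570
```

Most of the parameters sit in the first dense layer (65,600 of 122,570), because it connects all 1,024 flattened features to 64 units.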

Implementation Details

Training Configuration

The model is compiled with the following hyperparameters:

- Optimizer: Adam (Adaptive Moment Estimation).

- Loss Function: Sparse Categorical Crossentropy (from_logits=True).

- Metrics: Accuracy.

- Epochs: 10 (each epoch is one full pass over the training set).
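A model with these layer shapes and this training configuration could be set up roughly as follows. This is a sketch, not necessarily the article's exact code: the $3 \times 3$ kernels, $2 \times 2$ pooling, and ReLU activations are assumptions chosen to match the summary table, and the function names `build_model` and `compile_model` are hypothetical.

```python
import tensorflow as tf

def build_model():
    """Sequential CNN whose layer shapes match the summary table."""
    # Assumed: 3x3 kernels, 2x2 pooling, ReLU activations.
    return tf.keras.Sequential([
        tf.keras.Input(shape=(32, 32, 3)),
        tf.keras.layers.Conv2D(32, (3, 3), activation="relu"),
        tf.keras.layers.MaxPooling2D((2, 2)),
        tf.keras.layers.Conv2D(64, (3, 3), activation="relu"),
        tf.keras.layers.MaxPooling2D((2, 2)),
        tf.keras.layers.Conv2D(64, (3, 3), activation="relu"),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(64, activation="relu"),
        tf.keras.layers.Dense(10),  # raw logits, hence from_logits=True below
    ])

def compile_model(model):
    """Apply the optimizer, loss, and metric listed above."""
    model.compile(
        optimizer="adam",
        loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
        metrics=["accuracy"],
    )
    return model

model = compile_model(build_model())

# Training would then look like this (x_train, y_train, x_test, y_test are
# assumed to be the normalized CIFAR-10 arrays and their integer labels):
# history = model.fit(x_train, y_train, epochs=10,
#                     validation_data=(x_test, y_test))
```

The sparse variant of the loss is used because CIFAR-10 labels are integer class indices rather than one-hot vectors.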

Performance

Upon training for 10 epochs, the model typically achieves:

- Training Accuracy: High; the exact value varies with the random weight initialization.

- Test Accuracy: Approximately 70% – 75%.

- Overfitting: A divergence between training accuracy and validation accuracy suggests the model may memorize training data. Techniques such as Dropout or Data Augmentation are recommended to mitigate this.
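As an illustration of data augmentation, the sketch below applies a random horizontal flip to a batch of images using NumPy. It is one simple augmentation among many and is not part of the model described here; the function name `random_horizontal_flip` and its parameters are hypothetical.

```python
import numpy as np

def random_horizontal_flip(images, rng, p=0.5):
    """Flip each image left-right with probability p.

    images: array of shape (batch, height, width, channels).
    rng:    a numpy.random.Generator, passed in for reproducibility.
    """
    flip = rng.random(len(images)) < p      # one coin toss per image
    out = images.copy()
    out[flip] = out[flip, :, ::-1, :]       # reverse the width axis
    return out
```

Applying such a transform to each training batch exposes the network to slightly different inputs every epoch, which makes memorization harder. In Keras, the built-in `tf.keras.layers.RandomFlip` and `tf.keras.layers.Dropout` layers serve the same purpose inside the model itself.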

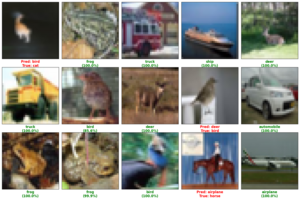

Result


Inference on Custom Images

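To test the model on external, higher-resolution images, each image must first be resized to the network's input size ($32 \times 32$ pixels) and scaled to [0, 1] in the same way as the training data. Because the model expects a batch of images, a single image also needs a leading batch dimension.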

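import numpy as np

from tensorflow.keras.preprocessing import image

img = image.load_img(path, target_size=(32, 32))  # path: location of the image file

img_array = image.img_to_array(img) / 255.0  # scale pixels to [0, 1], as in training

predictions = model.predict(np.expand_dims(img_array, axis=0))  # batch shape (1, 32, 32, 3)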
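Because the loss is configured with from_logits=True, the values returned by `model.predict` are presumably raw logits rather than probabilities. The sketch below shows one way to turn a row of logits into a class name and a probability. The helper names are hypothetical, and the class list follows the standard CIFAR-10 label order.

```python
import numpy as np

# Standard CIFAR-10 label order (index -> class name).
CLASS_NAMES = ["airplane", "automobile", "bird", "cat", "deer",
               "dog", "frog", "horse", "ship", "truck"]

def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - np.max(logits, axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=-1, keepdims=True)

def decode_prediction(logits):
    """Return (class name, probability) for one row of logits."""
    probs = softmax(np.asarray(logits, dtype="float64"))
    best = int(np.argmax(probs))
    return CLASS_NAMES[best], float(probs[best])
```

For a single-image batch, `decode_prediction(predictions[0])` would give the predicted label and the model's confidence in it.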

References

1. Prinzi, F., Currieri, T., Gaglio, S. and Vitabile, S. (2024) ‘Shallow and deep learning classifiers in medical image analysis’, European Radiology Experimental, 8(1), p. 26. doi:10.1186/s41747-024-00428-2.